Two hours at Duke revealed something most companies are missing: the future of AI isn't being built in boardrooms—it's being built by grad students who ask better questions than your strategy team.

The $600B Reality Check

DeepSeek just proved America's AI dominance isn't permanent, erasing nearly $600 billion from Nvidia's market value in a single day. DeepSeek wasn't built by U.S.-trained talent, but here's what should terrify you: international students earn 57% of the AI and machine learning PhDs awarded by U.S. universities, and then we force them to leave.

These aren't rejects; they're future founders desperate to stay. Instead, they face H-1B lottery odds as low as 15% and, for Indian nationals, green card backlogs stretching past a century. China's Thousand Talents Plan has already recruited more than 7,000 researchers. The next DeepSeek could just as easily be built by someone Stanford trained and America turned away.

Three Problems Destroying Our Edge

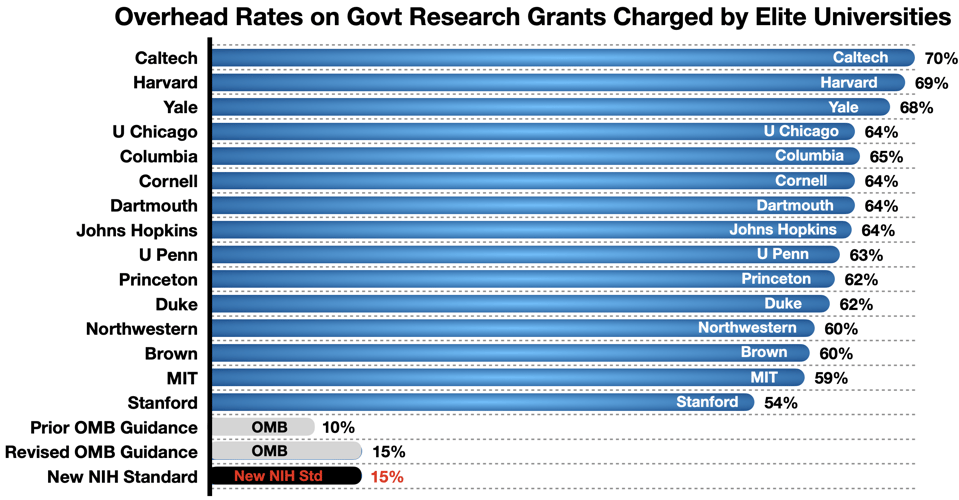

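1. The 60% Innovation Tax: Harvard, Yale, and Johns Hopkins each charge overhead rates above 60% on research grants, so every $1 million of research carries another $600,000 or more for administration and facilities. That money feeds bureaucracy, not breakthroughs.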

2. Immigration Theater: Brilliant PhDs who want to build America's future face a Kafkaesque visa system. The pipeline that gave us Satya Nadella and Ilya Sutskever now sends talent home by bureaucratic exhaustion.

3. Academia's Identity Crisis: Students want to build companies, not write papers. But most programs still optimize for citations over shipped code.

The Fix Exists—We Just Won't Do It

Staple green cards to STEM PhDs from accredited universities

Cap research overhead at 30% with full transparency

Fund builders, not paper-pushers—Berkeley, CMU, and Stanford already proved this works

Why This Matters Now

Academia saved AI once before. When the field became toxic in the 1980s, professors like Hinton, LeCun, and Bengio kept building in obscurity. They and their students went on to co-found OpenAI, transform Google, and build Meta's AI, turning "failed" research into trillions in value.

Today's students aren't asking "How do I publish?" They're asking "How do I start an AI company?" They get it.

The question is whether America will keep them, or watch them build the future for someone else.

🎙️ AI Confidential Podcast - Are LLMs Dead?

🔮 AI Lesson - AI as Your Career Accelerator

🎯 The AI Marketing Advantage - Your Meta AI Chats Are About To Power Ads

💡 AI CIO - The Paradox of AI Reports

📚 AIOS - This is an evolving project. I started with a 14-day free AI email course to get smart on AI. The next evolution will be a ChatGPT Super-user Course and a course on How to Build AI Agents.

Join senior executives & enterprise leaders for Charlotte’s largest AI conference, AI // FORWARD. Two days of strategic frameworks, operational playbooks, and peer-to-peer exchange on scaling AI from pilots to productivity.

Seats are strictly limited and selling fast. Don’t miss your chance!

What I Learned About AI from Two Hours in College

I spent two hours at Duke University — and the future of AI looked surprisingly bright.

Last week I was invited by my friend Jon Reifschneider, who leads Duke’s Master of Engineering in AI for Product Innovation program, to speak with his students.

I was genuinely excited; teaching at a university has been a long‑standing goal. What I quickly realized was not only how smart these students were, but also how curious they were.

Their curiosity wasn’t abstract. They weren’t just studying—they were building. Their questions were also a lot more entrepreneurial than I expected.

How do I start an AI company?

How can I start an AI side-hustle?

How does AI play into the open-source ecosystem?

How do we turn AI into something that matters?

It was encouraging to see them plying their trade, a far cry from the theoretical approach I had expected. They opened the conversation by describing how materials science students had paired with AI students to accelerate research in applied materials.

That matters. I believe the most important part of the AI revolution is building the skills and understanding to use this powerful tool to solve problems that make the world a better place: addressing climate change, curing cancer, and ending poverty and food insecurity. But all of that requires a system that builds and retains talent.

After my hour-long talk, students stayed for another hour of questions—many of them international students who desperately want to build their careers here but face an uncertain future.

Standing on that historic campus, watching these builder-students who might be forced to take their talents elsewhere, I realized we're about to repeat history's biggest mistake. Most people don't realize that academia has saved AI before—and we're now dismantling the very system that made American AI dominance possible.

AI in Academia: From Dorm Rooms to Boardrooms

Artificial Intelligence was born in academia, nearly died in academia, and was resurrected in academia before escaping to conquer the world. The story begins in 1956 at Dartmouth College, where John McCarthy convinced the Rockefeller Foundation to fund a summer workshop with an audacious premise: that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it."

This wasn't a corporate R&D lab or a military project—it was professors in a New Hampshire college town declaring they would crack the code of thought itself. For two decades, university AI labs rode high on this confidence, with MIT, Stanford, and Carnegie Mellon promising thinking machines were just around the corner.

Then came the AI winters. When AI failed to deliver on its grand promises by the mid-1970s, funding evaporated first from the very universities that had championed it. The term "Artificial Intelligence" became so toxic in academic circles that professors literally couldn't get grants with those words in their proposals. Today, we face a different kind of winter: not a winter of ideas, but a winter of talent. The same universities that kept AI alive are now watching their best minds leave—not because the research failed, but because our immigration system is failing them.

By the 1990s, the same researchers who had proudly called themselves AI scientists were rebranding as anything else—"machine learning," "statistical inference," "pattern recognition," "computational intelligence." Graduate students learned to describe their neural network research as "parallel distributed processing" to avoid the career suicide of being associated with AI. Universities that had built entire AI departments quietly folded them into computer science or cognitive science programs. The field hadn't died; it had gone into academic witness protection.

But academia was also where the AI resurrection began. While industry had given up on neural networks, a stubborn cohort of professors kept pushing: Geoffrey Hinton at Toronto working on deep learning despite ridicule, Yann LeCun at NYU advancing convolutional networks, Yoshua Bengio at Montreal refusing to abandon the approach. Their students, trained during AI's wilderness years, carried these "machine learning" techniques into tech companies just as computational power finally caught up to their ambitions. When AlexNet won the ImageNet competition in 2012, it came from Hinton's team at the University of Toronto: Alex Krizhevsky, Ilya Sutskever (later a co-founder of OpenAI), and Hinton himself.

When transformers revolutionized language models in 2017, the breakthrough came from Google researchers building on attention mechanisms pioneered in academic labs such as Yoshua Bengio's in Montreal. Suddenly, universities that had spent two decades hiding from the term "AI" were racing to establish AI institutes, and "machine learning" professors were comfortable calling themselves AI researchers again.

The technology that now powers enterprise strategies and sparks boardroom discussions started with graduate students in university basements during AI's darkest days, publishing papers on "statistical learning" while secretly believing they were building intelligence. The same academic institutions that gave AI its name, suffered through its winters, and camouflaged it as machine learning ultimately provided both the intellectual foundation and the human talent that made modern AI possible.

When ChatGPT launched and made AI a household term again, it was the culmination of decades of academic persistence—from Dartmouth's ambitious summer to Toronto's neural networks, from Stanford's rebranded statistics to MIT's resilient robotics labs. Academia didn't just incubate AI; it kept it alive when everyone else had given up.

Bridging Academia and Industry: Two Critical Challenges for American Innovation

The contrast couldn't be starker. While those pioneering professors fought to keep AI research alive despite zero support, today's students have all the technological resources they need—but face two devastating barriers that threaten to undo everything academia has built.

The Research Funding Paradox

The first challenge centers on how academic research gets funded and the increasingly contentious role of indirect costs. When students asked about funding, it was clear that academic-industry partnerships need better incentive structures and lower Facilities & Administrative (F&A) rates, which fundamentally shape those relationships. F&A rates are the percentage universities add on top of a federally funded project's direct research costs to cover indirect costs, and they have grown substantially: at the highest rates, overhead adds more than 60 cents for every research dollar.

The federal government recently halted funding for many grants, citing these overhead rates as a key concern. The NIH has pushed back forcefully, noting that indirect-cost rates average 27-28% and that elite institutions like Harvard, Yale, and Johns Hopkins each claim more than 60%. At a 60% rate, every dollar of research carries another 60 cents of administrative and facility costs, so more than a third of the total award never reaches the science itself. Incentives drive behavior, and only transparency and reasonable cost structures will attract the industry partnerships essential for innovation.

Source: George Calhoun, Forbes
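The overhead arithmetic is easy to misread, because an F&A rate is charged on top of direct research costs rather than carved out of them. Here is a minimal sketch, assuming the rate applies to the full direct-cost base (real negotiated rates apply to a "modified" base that excludes items such as equipment, so actual figures differ). The 28%, 30%, and 60% rates come from the figures above; the $1 million grant is a hypothetical round number.

```python
def overhead_breakdown(direct_costs: float, fa_rate: float) -> dict:
    """Split a grant into direct research costs and F&A (indirect) costs.

    Simplifying assumption: the F&A rate is applied to the full direct-cost
    base. Real negotiated rates use a modified base, so treat this as a sketch.
    """
    indirect = direct_costs * fa_rate        # overhead charged on top of research
    total = direct_costs + indirect          # what the funder actually pays
    return {
        "direct": direct_costs,
        "indirect": indirect,
        "total": total,
        "overhead_share": indirect / total,  # fraction of the award going to F&A
    }

# Hypothetical $1M of research at the average rate, a 30% cap, and the elite-institution rate.
for rate in (0.28, 0.30, 0.60):
    b = overhead_breakdown(1_000_000, rate)
    print(f"{rate:.0%} rate: ${b['indirect']:,.0f} overhead on $1M of research, "
          f"{b['overhead_share']:.1%} of the total award")
```

At a 60% rate, overhead works out to 37.5% of the total award; at a 30% cap, it falls to roughly 23%.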

But even if we fix research funding, it won't matter if we can't keep the people doing the research. And that brings us to an even more urgent crisis—one that several Duke students pulled me aside to discuss privately.

The Talent Retention Crisis

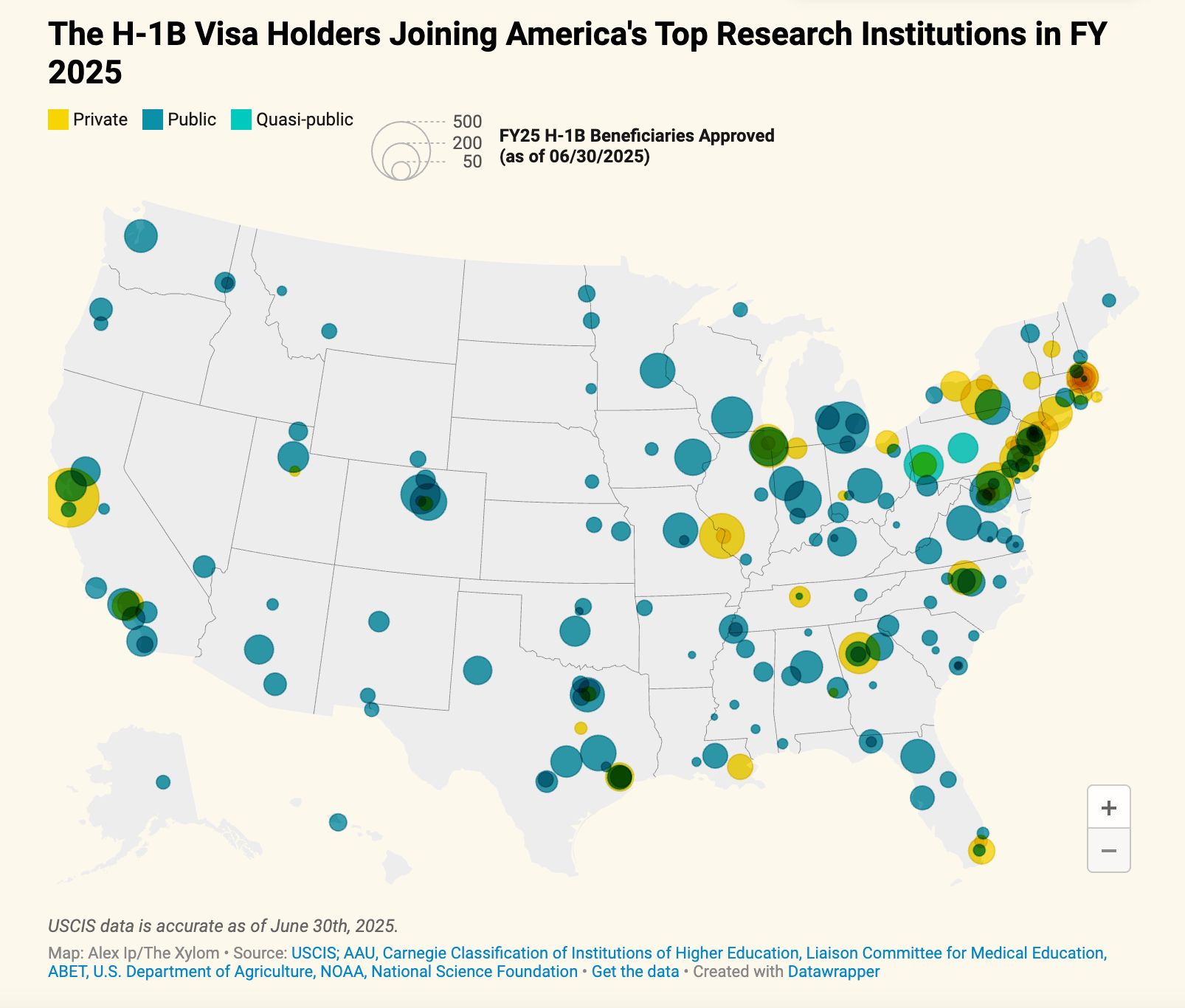

The second, perhaps more urgent concern involves our self-defeating immigration policies, particularly regarding H-1B visas. Many of Duke's brightest international students—the very people we need to maintain America's technological edge—see their future in the United States as increasingly uncertain.

This visa debate exposes a fundamental contradiction: America operates the world's premier talent development program for our strategic competitors. International students earn over one-third of STEM doctorates from U.S. universities, with the percentages rising to 49% for master's degrees and 57% for doctorates in AI and machine learning. Yet after investing in their education, we force them through a byzantine immigration system featuring H-1B lottery odds of just 15-35% and, for Indian nationals, green card backlogs stretching beyond a century.

Source: The XYLOM
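To see what 15-35% lottery odds mean for an individual graduate, here is a rough sketch. It assumes, hypothetically, that a graduate gets three independent lottery entries and that the odds stay the same each year; neither assumption comes from the sources above, and real registration rules and odds vary from year to year.

```python
def chance_of_selection(annual_odds: float, attempts: int) -> float:
    """Probability of being selected at least once in `attempts` lotteries.

    Illustrative only: assumes each year's draw is independent and that
    the odds do not change from year to year.
    """
    miss_every_time = (1 - annual_odds) ** attempts  # lose every single draw
    return 1 - miss_every_time

# Hypothetical scenario: three attempts at the low and high ends of the quoted range.
for odds in (0.15, 0.35):
    p = chance_of_selection(odds, attempts=3)
    print(f"{odds:.0%} annual odds, 3 tries: {p:.1%} selected, {1 - p:.1%} never selected")
```

Under these assumptions, roughly 27% to 61% of graduates would never be selected, even after three tries.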

Meanwhile, China actively courts these same graduates through initiatives like the Thousand Talents Plan, which has successfully recruited over 7,000 researchers, including more than 150 scientists from Los Alamos National Laboratory. Every AI researcher who takes their American education to Beijing or Bangalore represents not just a loss of talent, but a direct transfer of competitive advantage to our rivals.

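Many of AI's leading minds came to the United States from abroad, including Yann LeCun (Meta), Satya Nadella (Microsoft), Ilya Sutskever (OpenAI co-founder), and Fei-Fei Li (Stanford HAI). Others, like Geoffrey Hinton in Toronto and Demis Hassabis at Google DeepMind in London, built their careers largely outside the United States. Without a workable immigration path, the next generation of leaders may follow that second route.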

The result? Top talent trained at Duke, MIT, Stanford, and Carnegie Mellon takes their expertise directly to Beijing, Bangalore, or London, accelerating our competitors' AI capabilities.

The solution isn't complicated, but it requires political will. We need a friction-free pathway for STEM graduates from accredited U.S. universities to remain and contribute to American innovation. This isn't opening floodgates—it's the strategic retention of talent we've already invested in developing. In an AI arms race where breakthroughs can reshape entire industries overnight, forcing our best-trained minds to compete against us is nothing short of unilateral technological disarmament.

The Programs Getting It Right—And Why It Won't Matter If We Can't Keep The Talent

A decade ago, most university “AI” activity looked like papers and prototypes. Today, the programs worth watching give students enterprise‑grade access to models, compute, and data—then expect deliverables.

Just as important, several programs are organized around deliverables — not just grades:

Berkeley’s Data‑X structures projects around “validate, design, build,” with demos that look like a venture studio’s pipeline, not a problem set archive. It also welcomes participation from students, researchers, and companies: AI for everyone, hosted in academia.

Carnegie Mellon’s capstone projects are sponsored by companies, which expect proofs of concept delivered under faculty supervision. (CMU School of Computer Science)

Stanford’s RegLab builds and deploys AI systems with government agencies (e.g., the Statutory Research Assistant — STARA), proving that “applied” can mean deployed in real bureaucracies.

These programs aren't just teaching AI—they're creating founders. But here's the tragedy: many of their best students, particularly international ones, will be forced to create their companies elsewhere. We're running the world's best startup accelerator for our competitors.

DeepSeek Is Just the Beginning—Unless We Act Now

The DeepSeek incident demonstrated how quickly emerging AI capabilities from rival nations can rattle our markets and challenge our technological leadership. When DeepSeek revealed its ChatGPT-like AI model operating at a fraction of the cost of U.S. models, Nvidia lost nearly $600 billion in market value—the most any stock has ever lost in a single day. Every AI researcher we train and lose potentially narrows our competitive advantage in what is effectively an AI arms race.

The students I met at Duke are entering a new era: AI isn't about papers anymore—it's about products. They're already building. But if we keep forcing them to choose between their dreams and their visas, they'll build those dreams elsewhere.

Academia saved AI once by harboring it through the winter. Now we need to save ourselves by keeping the talent that academia produces. The formula is simple: curiosity plus access plus retention equals dominance. Remove any element, and we hand the future to someone else.

Those Duke students asking about starting AI companies? In a sane system, they'd be our next unicorn founders. In our current system, they might be building those unicorns in Beijing or Bangalore instead. The clock isn't just ticking—it's screaming.

One of the newsletters we publish as part of The Artificially Intelligent Enterprise network is AI CIO by DevOps legend John Willis. His latest book, Rebels of Reason, collects the stories that run from the early days of Turing, Minsky, and McCarthy to the Altmans, Hintons, and Sutskevers of today.

Willis does a great job of finding the connective thread that has led to what will likely be named one of the most significant technology breakthroughs in history.

One topic that came up several times was the outlook for entry-level coding jobs. It’s a tough question: there are plenty of tools that write code, but there’s still no good substitute for the experience of deploying apps at scale. Developers typically start their careers on simple projects, learning to write code, then to debug it, and eventually gaining the experience to deploy it at scale. With so many tools now generating software for us, we are likely opening a gap between writing software and knowing the best practices for deploying it securely and at scale. Here are some tools that can help close that gap.

Vercel v0 — Turn prompts or sketches into working React/Next.js components and pages, with instant preview deployments. One click promotes a reviewed build to production, so juniors learn shipping, not just scaffolding.

Streamlit — Convert Python scripts and notebooks into shareable web apps in minutes. Ideal for data/LLM prototypes where you need stakeholder feedback fast and a simple path to hosted demos.

Cursor — An AI‑native code editor with repo‑aware chat that drafts tests, refactors safely, and explains diffs. Great for accelerating onboarding and producing PR‑ready changes with context.

Windsurf — An agentic IDE that executes goal‑driven, multi‑file edits across a codebase. Useful for scaffolding services and applying cross‑cutting concerns like auth, validation, and logging.

Bolt.new — A browser‑based full‑stack environment (WebContainers) that runs real Node/React apps with zero local setup. Share a live URL for reviews, then export to your repo for CI/CD.

Base44 — A natural‑language, no/low‑code builder that assembles full apps and internal tools quickly. Use it to validate workflows with business users, then harden the critical paths in your standard stack.

My suggestion: build apps with tools like these, then get good at troubleshooting them as you roll them into production. Even for a single-user app, these are the skills we risk losing if we don’t deliberately develop them.

Prompt of the Week: Using AI to Study and Learn

ChatGPT's Study Mode, introduced in July 2025, is a learning experience that helps you work through problems step by step instead of just getting an answer. The feature aims to help students develop their own critical thinking skills, rather than simply obtain answers to questions.

You don’t have to be enrolled in college to learn AI, though, and this feature can be applied in many ways: upskilling for your business, or helping your kids learn interactively and at their own pace. Here’s how it works:

Socratic Method: Instead of generating immediate answers, Study Mode acts more like a tutor, firing off questions, hints, self-reflection prompts, and quizzes that are tailored to the user and informed by their past chat history.

Adaptive Learning: ChatGPT gauges your level and remembers past sessions to meet you where you are.

Progress Tracking: Features goal setting and progress tracking across conversations to help monitor learning development.

Knowledge Checks: Quizzes and open-ended questions show what's sticking, with feedback to strengthen weak spots.

Here's a comprehensive prompt you can use with ChatGPT's Study Mode to start learning prompt engineering, a topic most of you are interested in. It can easily be adapted to other fields of study.

I want to master prompt engineering for AI language models. I'm a beginner and would like to learn this systematically. Please guide me through understanding:

1. The fundamental principles of how language models interpret prompts

2. Key techniques for writing effective prompts

3. Common mistakes to avoid

4. How to iterate and refine prompts

Start by testing my current understanding, then help me build knowledge step-by-step. After each concept, give me a practical exercise to apply what I've learned. Don't just give me the answers - help me think through the problems myself.

My goal is to be able to write prompts that consistently get accurate, relevant, and useful responses from AI models. Where should we begin?

Bonus Prompt: Tutor for Students

Many parents want to help their kids study, but for many of them it’s been a long time since they took algebra, history, or science. You can still help by using Study and Learn in ChatGPT. Here’s a prompt your kids can use when they don’t have access to a human tutor. Just fill in the fields in square brackets.

I'm a [grade level] student working on my [subject] homework about [specific topic]. I want to truly understand this material, not just get the answers.

Please act as my personal tutor and:

1. First, check what I already know about this topic by asking me 2-3 questions

2. Help me understand the core concepts before we tackle the problems

3. When I'm stuck, give me hints instead of answers

4. Explain things in a way that makes sense for someone my age

5. Use real-world examples I can relate to

6. After we work through problems, quiz me to make sure I really get it

Here's what I'm working on: [paste homework question or describe the assignment]

My current understanding level: [beginner/some knowledge/confused about specific parts]

What I find challenging: [specific areas of difficulty]

Please guide me step-by-step and make sure I understand the "why" behind each concept. If I give a wrong answer, help me figure out where I went wrong instead of just correcting me.

Let's start with making sure I understand the basics before we dive into the homework problems.
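If you reuse this tutor prompt across subjects or for more than one child, filling in the bracketed fields can be scripted. Here is a minimal sketch in Python. The `fill_prompt` helper and the sample answers are hypothetical, and the template is abbreviated to the lines that contain bracketed fields.

```python
import re

# Abbreviated tutor prompt: only the lines containing [bracketed] fields.
TEMPLATE = (
    "I'm a [grade level] student working on my [subject] homework about "
    "[specific topic]. I want to truly understand this material, not just get the answers.\n"
    "Here's what I'm working on: [paste homework question or describe the assignment]\n"
    "My current understanding level: [beginner/some knowledge/confused about specific parts]\n"
    "What I find challenging: [specific areas of difficulty]"
)

def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Replace each [field] with its value; raise KeyError if a field has no value."""
    def substitute(match: re.Match) -> str:
        field = match.group(1)  # text between the brackets
        if field not in values:
            raise KeyError(f"No value supplied for [{field}]")
        return values[field]
    # Match a '[', then one or more non-bracket characters, then ']'.
    return re.sub(r"\[([^\[\]]+)\]", substitute, template)

# Hypothetical example answers for an 8th-grade algebra assignment.
prompt = fill_prompt(TEMPLATE, {
    "grade level": "8th grade",
    "subject": "algebra",
    "specific topic": "solving two-step equations",
    "paste homework question or describe the assignment": "Solve 3x + 5 = 20",
    "beginner/some knowledge/confused about specific parts": "some knowledge",
    "specific areas of difficulty": "knowing which step to do first",
})
print(prompt)
```

Raising an error on a missing field, rather than silently leaving the brackets in place, keeps a half-filled prompt from reaching ChatGPT.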

I appreciate your support.

Your AI Sherpa,

Mark R. Hinkle

Publisher, The AIE Network

Connect with me on LinkedIn

Follow Me on Twitter