Using enterprise data with AI is one of the biggest use cases for AI right now. Today, I give you an overview of how to do that with RAG (don’t worry, the first part of the explanation is accessible to anyone). Also, OpenAI, Microsoft, and Google released a ton of AI news this month, and it will start to trickle down into pilots and larger-scale implementations. I also made you a little rap using AI, so make sure to make it to the end for this week’s bonus content.

Thank you for subscribing; it means a lot to me. Enjoy this week’s edition.

Sentiment Analysis

We're experiencing a rapid expansion in generative AI capabilities, fundamentally changing technology. These advancements are no longer occasional breakthroughs but a steady flow of progress, transforming industries and redefining our understanding of machine potential. As this technology evolves, it presents new opportunities and challenges, indicating a shift toward an AI-driven future that requires our attention and adaptation. Steven Levy at Wired does a deep dive into the advancements in AI in his article, “It’s Time to Believe the AI Hype.”

AI TL;DR

Generative AI News, Tips, and Apps

OpenAI created a team to control ‘superintelligent’ AI — According to a source from the Superalignment team at OpenAI, which is responsible for developing ways to govern and steer "superintelligent" AI systems, the team was promised 20% of the company's compute resources. However, requests for a fraction of that compute were often denied, preventing the team from doing its work.

Slack is training its machine learning models on your chat behavior unless you opt out via email. A post on X revealed that Slack is using its customer data to train “global models,” which it uses to power channel and emoji recommendations and search results. Why should you care? We need to be aware of this issue whenever we provide private data to our SaaS vendors. Think about services where you file your taxes or share other sensitive information.

AI’s Trust Problem: Twelve persistent risks of AI that are driving skepticism — The AI trust gap can be understood as the sum of the persistent risks (both real and perceived) associated with AI; depending on the application, some risks are more critical. The Harvard Business Review dives into the list and explains them all.

Feature Story

Retrieval Augmented Generation (RAG) To Reduce LLM Hallucinations

One of the biggest fears when using LLMs is hallucinations. Hallucinations are instances where large language models (LLMs) like GPT-4o or Claude 3 Opus produce outputs that are coherent and grammatically correct but factually incorrect or nonsensical.

This is a bad descriptor as it anthropomorphizes LLMs (making them sound more like humans when they are not), but what is happening is that the LLM lacks an adequate amount of data to make a good prediction of the answer.

The prevalence of hallucinations in LLMs is estimated to be as high as 15% to 20% for GPT-3.5 and is going down. However, it is still a huge concern, and it can have profound implications for a company's reputation and the reliability of AI systems.

Reducing Hallucinations with RAG

Retrieval-augmented generation, or RAG, might have a quirky ring to it, but the odd acronym is the method we use to integrate enterprise data with generative AI. Simply put, we combine external data stored in specialized databases (vector databases) with our query to a large language model to ground the model and get better results.

LLM grounding connects language models with real-world knowledge to improve accuracy and relevance. This is crucial for ensuring the models understand and respond appropriately to user queries. LLM grounding, also called common-sense or semantic grounding, helps language models understand domain-specific concepts by linking linguistic expressions to real-world data.

It enables more accurate responses, reduces hallucination issues, and decreases the need for human supervision during interactions.

Overview of Retrieval-Augmented Generation (RAG)

Retrieval-augmented generation (RAG) is a technique in natural language processing that combines information retrieval with language generation. This approach provides precise and informative answers to user queries by leveraging a combination of database searches and advanced language models.

When a user asks a question, such as "What is the tallest mountain in the world?" the system starts by retrieving relevant information from a database. The retrieved data is then used by the language model to generate a comprehensive and accurate answer. This combination of retrieval and generation ensures the response is factually correct and contextually rich.

The key components of RAG include embeddings, vector databases, and language models. Embeddings are numerical representations of words or sentences that capture their meanings in a format that computers can understand. For instance, words like "cat," "dog," and "fish" are each represented by unique sets of numbers, allowing the system to understand the relationships and similarities between different words.
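To make the idea of embeddings concrete, here is a minimal sketch in Python. The three-number vectors are hand-picked for illustration and are not the output of a real embedding model (real models produce hundreds or thousands of dimensions); the dimension meanings in the comment are an assumption of this example. Cosine similarity is one common way to compare two embeddings.

```python
import math

# Hand-picked toy vectors, NOT output from a real embedding model.
# Assumed dimensions for illustration: [furry, lives_in_water, kept_as_pet]
embeddings = {
    "cat": [0.9, 0.1, 0.9],
    "dog": [0.8, 0.2, 0.9],
    "fish": [0.0, 0.9, 0.7],
}

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_fish = cosine_similarity(embeddings["cat"], embeddings["fish"])
print(f"cat vs dog:  {cat_dog:.2f}")
print(f"cat vs fish: {cat_fish:.2f}")
```

With these toy numbers, "cat" scores closer to "dog" than to "fish", which is the kind of relationship a real embedding model learns from data rather than from hand-written features.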

Vector databases store these embeddings and enable quick retrieval of similar information. When a user searches for a term like "furry pet," the vector database can efficiently find related entries such as "cat" and "dog" because their embeddings are similar. This retrieval process is essential for providing timely and relevant answers.
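The "furry pet" lookup can be sketched with a tiny in-memory store. `TinyVectorStore` is a hypothetical class written for this example, not the API of any real vector database, and the vectors are made up by hand. Production vector databases typically use approximate nearest-neighbor indexes rather than the brute-force scan shown here.

```python
import math

class TinyVectorStore:
    """A toy in-memory stand-in for a vector database (hypothetical, for illustration)."""

    def __init__(self):
        self._items = []  # list of (label, vector) pairs

    def add(self, label, vector):
        self._items.append((label, vector))

    def search(self, query_vector, k=2):
        """Return the k labels whose vectors are most similar to the query."""
        scored = [(self._cosine(query_vector, vec), label) for label, vec in self._items]
        scored.sort(reverse=True)  # highest similarity first
        return [label for _, label in scored[:k]]

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm_a = math.sqrt(sum(x * x for x in a))
        norm_b = math.sqrt(sum(y * y for y in b))
        return dot / (norm_a * norm_b)

# Made-up vectors; assumed dimensions: [furry, lives_in_water, kept_as_pet]
store = TinyVectorStore()
store.add("cat", [0.9, 0.1, 0.9])
store.add("dog", [0.8, 0.2, 0.9])
store.add("fish", [0.0, 0.9, 0.7])

furry_pet = [1.0, 0.0, 1.0]  # hand-written stand-in for the embedding of "furry pet"
results = store.search(furry_pet, k=2)
print(results)
```

The search returns "cat" and "dog" ahead of "fish" because their vectors point in nearly the same direction as the query vector.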

Language models like GPT-4o can understand and generate human language. After the relevant information is retrieved from the database, the language model uses this data to construct an informed response. This dual approach of combining retrieved data with the language model's generative capabilities results in accurate and contextually appropriate answers.

RAG enhances accuracy by grounding responses in reliable information from databases. By generating contextually enriched responses, RAG ensures that users receive correct and well-explained answers. This makes RAG useful in applications such as customer support and educational tools.

For example, if a user asks, "What are the benefits of exercise?" the RAG system first retrieves relevant articles and studies about exercise. Then, it generates an answer like, "Exercise improves your health by strengthening your heart, increasing flexibility, and reducing stress." This response is accurate, detailed, and easy to understand, showcasing the power of RAG in delivering high-quality information.
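The step where retrieved text is combined with the user's question can be sketched as simple prompt construction. The function name `build_grounded_prompt`, the prompt wording, and the sample passages are all assumptions made for this example; a real system would pass the resulting prompt to whichever LLM it uses.

```python
def build_grounded_prompt(question, passages):
    """Combine retrieved passages with the user's question into one prompt."""
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is not enough, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

# Placeholder passages standing in for whatever the retriever returned.
retrieved = [
    "Regular exercise strengthens the heart.",
    "Stretching and movement increase flexibility.",
    "Physical activity is associated with lower stress.",
]
prompt = build_grounded_prompt("What are the benefits of exercise?", retrieved)
print(prompt)
```

Telling the model to rely on the supplied context, and to admit when the context falls short, is one common way to steer it away from guessing.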

Currently, RAG is the most common technique for improving natural language processing by combining information retrieval and language generation. It ensures users receive accurate, contextually relevant, and well-explained answers to their questions, making it a valuable tool in various applications.

Retrieval Augmented Generation (RAG): Enhancing LLM Results for Enterprises

RAG uses a retriever model to find pertinent documents from a large corpus and a generative model to produce coherent and accurate responses based on the retrieved information. This approach ensures that the AI's outputs are linked to real-world knowledge and contexts, resulting in more controlled and accurate responses.

Studies have shown that using RAG with advanced language models like GPT-4 can increase the factual correctness of responses by 13% compared to using the language model alone. RAG enables AI to be tailored to specific business content, including vectorized documents, images, and other data formats, provided embedding models are available.

Vectara, a RAG-as-a-service provider, maintains a Hallucination Leaderboard based on the Hughes Hallucination Evaluation Model, a model they developed to measure how likely an LLM is to hallucinate.

Key Components of RAG

Retrieval-augmented generation (RAG) involves two main components: a retriever that identifies relevant information from a corpus, and a generator that crafts coherent responses. The query and document encoders transform inputs and documents into vectors to enable efficient and accurate retrieval. These vectors are stored in an index for quick access.

Embeddings: Numerical representations of words or sentences that capture their meanings, allowing the system to understand relationships and similarities between words.

Vector Databases: Store embeddings and enable quick retrieval of similar information based on user queries.

Language Models: Understand and generate human language using retrieved data to construct informed responses.

Retriever Models: Fetch relevant documents or passages based on a given query, employing vector representations of text to identify the most pertinent information.

Frameworks: LangChain provides tools and infrastructure to build and deploy RAG systems efficiently, and Llama Guard, Meta's safeguard model, can screen prompts and responses to help keep them secure.

Implementing RAG

The process of implementing a RAG system involves several steps:

Preprocessing: Tokenizing, cleaning, and generating embeddings from text data using pre-trained models.

Storage: Storing embeddings in vector databases like MongoDB Atlas Vector Search, Milvus, or Weaviate.

Retrieval: Using retriever models like DPR to find the top-k relevant documents based on query embeddings.

Generation: Passing retrieved documents to a generative model like GPT-3 to produce the final response.

Integration: Managing the workflow with a framework such as LangChain, with a safeguard model such as Llama Guard screening inputs and outputs, for secure and efficient deployment.
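The steps above can be sketched end to end in a few lines of Python. This is a toy under stated assumptions: word counts stand in for real embeddings, a plain list stands in for a vector database, the `generate` function only builds the prompt instead of calling an LLM, and the sample documents are invented for the example.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Preprocessing: lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def embed(text):
    """Toy embedding: a word-count vector. A real system would call an embedding model."""
    return Counter(tokenize(text))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, index, k=2):
    """Retrieval: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def generate(query, docs):
    """Generation placeholder: a real system would send this prompt to an LLM."""
    context = "\n".join(f"- {d}" for d in docs)
    return f"Context:\n{context}\n\nQuestion: {query}"

# Storage: a plain list of (document, embedding) pairs stands in for a vector database.
# The documents are invented sample data.
documents = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support is available by email on weekdays.",
    "Shipping takes five business days within the country.",
]
index = [(doc, embed(doc)) for doc in documents]

question = "What is the refund policy for returns?"
top_docs = retrieve(question, index, k=1)
print(generate(question, top_docs))
```

Swapping in a real embedding model, a real vector database, and a real LLM call changes the body of `embed`, the `index`, and `generate`, but the overall flow of preprocess, store, retrieve, and generate stays the same.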

Impact on Enterprises

RAG is a cost-effective solution for businesses, allowing them to introduce new, domain-specific information to their AI systems without retraining models. This makes generative AI technology more accessible and applicable across various industries. RAG also ensures the security and reliability of enterprise data, giving businesses confidence in leveraging the technology safely and effectively.

Security and Reliability

With a well-designed RAG deployment, enterprises can keep control over the security and reliability of their data. The architecture can be built so that the information retrieval system not only returns relevant results but does so with the utmost consideration for data protection and operational stability. This means businesses can leverage RAG with the confidence that their proprietary content is being used safely and effectively.

The Future

Retrieval-augmented generation (RAG) is currently the most accepted method for bridging the gap between generative AI and enterprise data, and it is not expected to be replaced soon. Over the long term, smarter architectures may evolve and eventually outperform RAG.

Prompt of the Week

How to Use ChatGPT's Memory Function

ChatGPT's memory function allows for more personalized interactions by remembering information between sessions.

1. Enabling Memory

Initial Setup:

Log in: Sign in to your OpenAI account via the ChatGPT app or web interface.

Access Settings: Navigate to the settings menu in the upper right-hand corner (the new ChatGPT interface shows it as a white diamond in a blue circle).

Enable Memory: Find the memory settings and toggle the option to enable memory. You might need to grant permission to store personal data.

2. Using Memory Effectively

Providing Initial Information:

Clear Input: Specify what you want the AI to remember. For example, “Remember that I am an AI consultant specializing in trends in AI and productivity for business users.”

Contextual Use: Use memory to maintain context across sessions. For instance, “Remember that I often discuss enterprise AI solutions with C-level executives.”

Updating Information:

Revisions: Update stored information as your needs change. For example, “Update my role to include my recent experience as a design director for online media.”

Deletions: If information becomes irrelevant, instruct the AI to forget it. For instance, “Forget that I am focusing on open source software this quarter.”

Practical Scenarios:

Ongoing Projects: “Remember that I am working on a project about AI-driven business analytics for the next two months.”

Preferences: “Remember that I prefer responses to be segmented into clear, actionable steps.”

3. Advanced Tips for Expert Users

Optimizing for Business Use:

Detailed Scenarios: Provide detailed information for recurring topics. For example, “Remember that in my blog posts, I target mid-level to C-Level executives in enterprise AI.”

Regular Updates: Keep the AI updated with your evolving business environment and projects to maintain relevance.

Data Management:

Privacy Considerations: Do not store sensitive or proprietary information that could violate company policies or confidentiality agreements.

Periodic Audits: Regularly audit the stored information to keep it current and relevant.

4. Best Practices for Memory Use

Clarity and Brevity:

Concise Instructions: Keep your memory instructions clear and concise to avoid confusion.

Regular Check-ins: Periodically ask the AI to recount what it remembers to ensure accuracy, e.g., “What do you remember about my current focus areas?” You can always see your memories in the ChatGPT settings.

Consistent Updates:

Dynamic Adjustments: Update the memory with significant changes in your role or projects, e.g., “Update that I am now also focusing on AI ethics in enterprise solutions.”

By effectively leveraging ChatGPT's memory function, you can create a more personalized and efficient interaction experience, making your AI consultancy work smoother and more impactful.

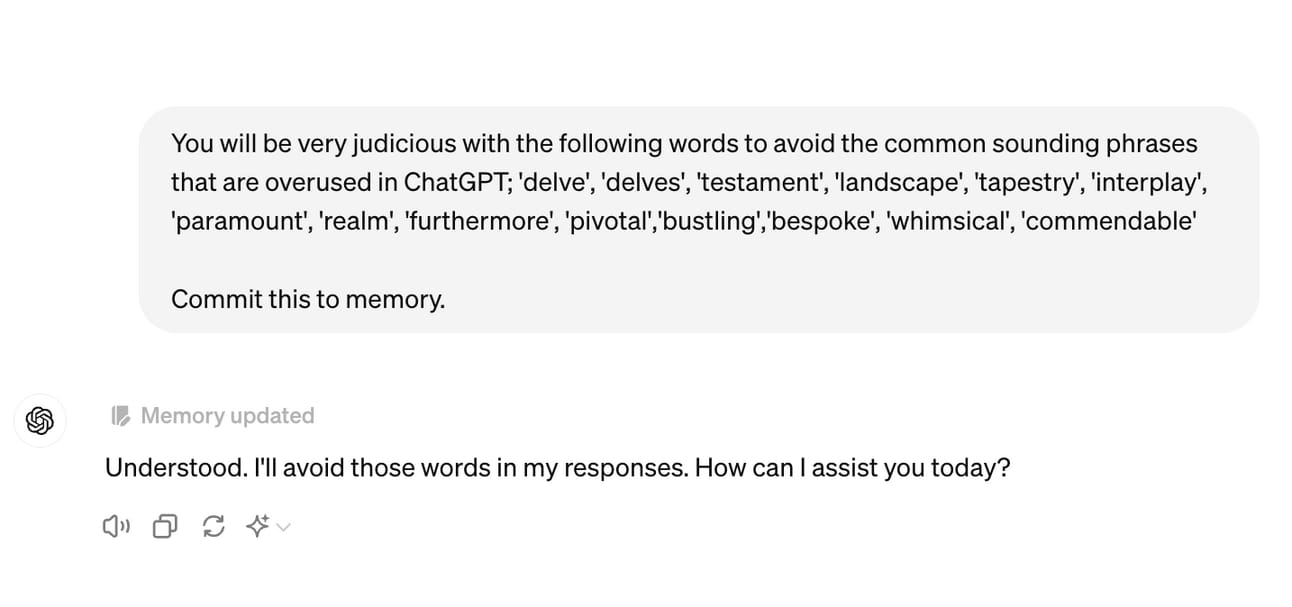

Here’s an example of a helpful prompt. I hate that ChatGPT uses words and phrases that I find unnatural.

You will be very judicious with the following words to avoid the common sounding phrases that are overused in ChatGPT; 'delve', 'delves', 'testament', 'landscape', 'tapestry', 'interplay', 'paramount', 'realm', 'furthermore', 'pivotal','bustling','bespoke', 'whimsical', 'commendable'

Commit this to memory. You should get the following general response to confirm this will no longer happen.

I appreciate your support.

BONUS CONTENT: The AI Boost

Suno: AI-Powered Music Creation

Suno.ai interface

Suno.ai is an AI-powered music creation platform that enables anyone to create original songs from a text description, even without musical experience or knowledge. Launched in December 2023 by Suno, Inc., the platform has quickly gained popularity due to its ease of use and impressive results. You can use Suno to create music you can use for all sorts of

Key Features and Highlights:

Text-to-music generation: Users input a text prompt describing the desired song, and Suno's AI generates the music, including vocals and instrumentation.

Genre and language flexibility: Suno can create songs in various genres and languages, catering to diverse musical preferences.

Customization options: Users can control the length of their songs, choose between instrumental or vocal versions, and adjust various musical elements.

Sharing and downloading: Suno allows users to share their creations directly on the platform or download them for personal use.

Suno.ai is a revolutionary tool that democratizes music creation by making it accessible to everyone. Its innovative approach and user-friendly interface have garnered widespread attention and praise, solidifying its position as a leader in AI-generated music.

Here’s a rap I made in Suno to give you an idea of its capabilities. It was a one-shot prompt, but I realized that you need to make some misspellings for it to get the pronunciation correct.